Guide to Creating Realistic DeepFake Videos with DeepFaceLab! (SAEHD Model)

Deepfake is one of the sexiest technologies of our age, no doubt. How about trying it yourself?

What Exactly Is a Deepfake?

According to Wikipedia:

Deepfakes are a type of synthetic media in which a person in an existing image or video is replaced with someone else’s likeness using artificial neural networks. They are often produced by combining and superimposing existing media over source media using machine learning techniques known as autoencoders and generative adversarial networks (GANs).

The word Deepfake is derived from the combination of “deep learning” and “fake.”

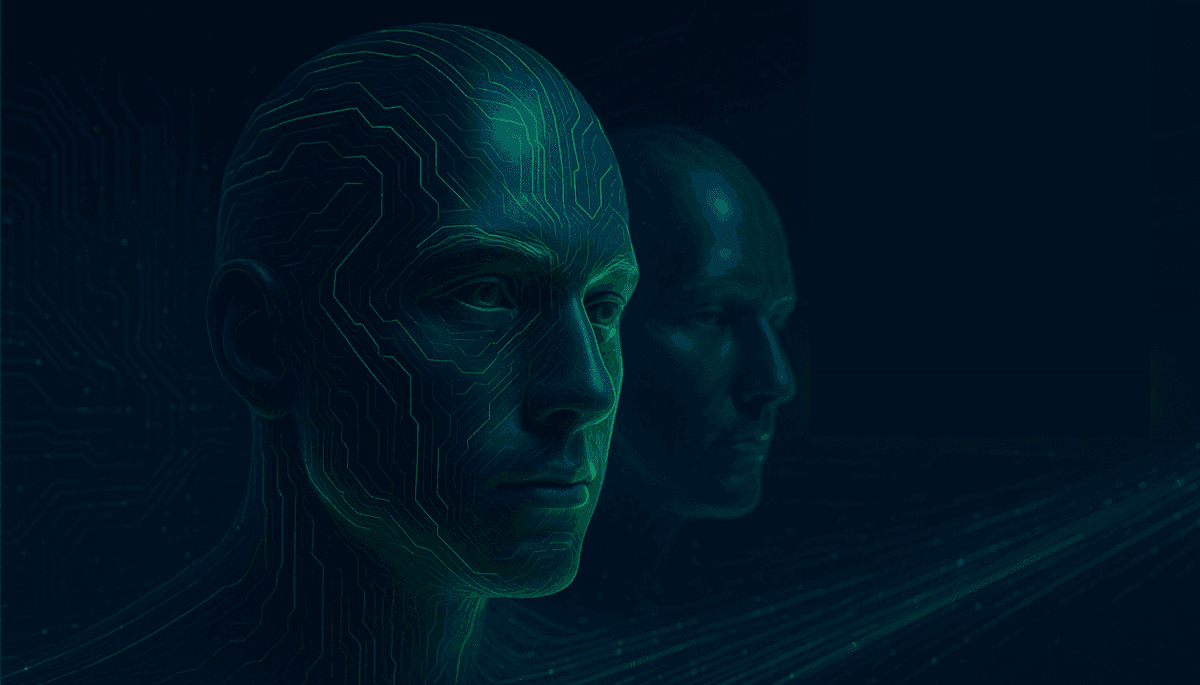

Face Swapping in Action

Here’s an example from Turkey — a frame from a Ziraat Bank commercial.

You can actually do this yourself on your own computer without any coding knowledge at all! Sounds unbelievable, right? Then let’s move on to the tutorial I’ve prepared for you.

Step 1: Downloading the Files

- First, go to the project’s GitHub page via the link I’ve shared.

- Once you scroll down a bit, you’ll find the Releases section. Choose the version that suits your system.

- You don’t need to download every file there — just the one compatible with your GPU is enough. (I learned that the hard way ☺️)

- After downloading the file, double-click it and hit Extract. That's it! You're now ready to create wonders with this exciting technology.

Step 2: Preparing the Workspace

You’ll be greeted by a screen like this. Get used to it — you’ll be spending a lot of time here. It’ll become your best friend soon enough.

The Workspace folder is where the magic happens — everything starts there.

- Inside the Workspace folder, you’ll find two sample videos named data_dst and data_src. These come by default when you install the program. I started with them too, but later replaced them with different videos for new experiments. So the videos you’ll see in my screenshots won’t be the same as yours.

“data_src” and “data_dst” videos

- data_src (source) → The video that provides the face.

- data_dst (destination) → The video that will receive the face.

Faces will be extracted from data_src and transferred onto the person in data_dst.

When Choosing Your Source and Destination Videos, Keep in Mind:

- Use high-resolution videos.

- The faces should not be too far from the camera.

- Choose clips with varied angles and expressions.

- Ensure good lighting conditions.

- Try to match facial features between the two videos (e.g., don't pair a person wearing glasses with one who isn't).
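If you like scripting, you can turn the first and last items of this checklist into a quick pre-flight check. This is a hypothetical sketch, not part of DeepFaceLab: the 720-pixel minimum is an assumed cut-off for "high-resolution," and you fill in each clip's height and whether the person wears glasses by hand. Lighting and variety of angles still need your own eyes.

```python
from dataclasses import dataclass

# Assumed cut-off for "high-resolution" (illustrative, not a DeepFaceLab requirement).
MIN_HEIGHT = 720


@dataclass
class Clip:
    name: str
    height: int           # vertical resolution in pixels, e.g. 1080
    wears_glasses: bool   # noted by eye while watching the clip


def check_pair(src: Clip, dst: Clip) -> list[str]:
    """Return warnings for a source/destination pair; an empty list means no issues found."""
    warnings = []
    for clip in (src, dst):
        if clip.height < MIN_HEIGHT:
            warnings.append(f"{clip.name}: {clip.height}p is below {MIN_HEIGHT}p")
    if src.wears_glasses != dst.wears_glasses:
        warnings.append("glasses mismatch between source and destination")
    return warnings


if __name__ == "__main__":
    src = Clip("data_src", height=1080, wears_glasses=False)
    dst = Clip("data_dst", height=480, wears_glasses=True)
    for warning in check_pair(src, dst):
        print(warning)
```

In that example, both the 480p destination clip and the glasses mismatch would be flagged.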

A Small Note

Always name your working videos data_src and data_dst. You can move the original sample videos to a new folder on your desktop for safekeeping, but don't delete them! Keeping all your creations organized makes it easy to revisit them later.

When starting a new project, simply click “Clear Workspace” — this will reset everything for you.

Then, place your new source and destination videos in the Workspace folder and you’re ready to begin.
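Here's an optional Python sketch of that housekeeping, assuming your videos are .mp4 files. It moves any existing data_src/data_dst videos into a backup folder (nothing gets deleted) and then copies your new clips in under those names. The function name and all folder paths are hypothetical, so point them at your own DeepFaceLab folder.

```python
import shutil
from pathlib import Path

# The guide says to always use these names; the .mp4 extension is an assumption.
VIDEO_NAMES = ("data_src.mp4", "data_dst.mp4")


def prepare_workspace(workspace: Path, backup_dir: Path,
                      new_src: Path, new_dst: Path) -> None:
    """Back up the current data_src/data_dst videos, then copy new clips in under those names."""
    backup_dir.mkdir(parents=True, exist_ok=True)

    # Refuse to overwrite an earlier backup, and check this before moving anything.
    for name in VIDEO_NAMES:
        if (workspace / name).exists() and (backup_dir / name).exists():
            raise FileExistsError(f"{backup_dir / name} already exists")

    # Move (not delete) the current videos so they stay available for later.
    for name in VIDEO_NAMES:
        if (workspace / name).exists():
            shutil.move(str(workspace / name), str(backup_dir / name))

    # Install the new clips under the expected names.
    shutil.copy2(new_src, workspace / "data_src.mp4")
    shutil.copy2(new_dst, workspace / "data_dst.mp4")
```

For example: `prepare_workspace(Path("workspace"), Path.home() / "Desktop" / "dfl_samples", Path("my_src.mp4"), Path("my_dst.mp4"))`. Because it refuses to overwrite an existing backup, use a fresh backup folder for each project.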

Step 3: Buckle Up, Let’s Begin!

Follow these steps in order:

- 1. extract images from video data_src (This splits data_src.mp4 into individual frames.)

- Just press Enter several times to keep the default settings.

When it says “Press any key to continue,” you can close the window.
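Curious how many images this step produces? Assuming every frame is extracted, the count is simply duration × frame rate, and those images add up on disk. Here's a small sketch of that arithmetic. The 3 MB per image is a placeholder guess, not a measured DeepFaceLab figure, so check the size of a few of your own extracted frames.

```python
def estimate_frames(duration_s: float, fps: float) -> int:
    """Images produced when every frame is extracted (duration x frame rate)."""
    return round(duration_s * fps)


def estimate_disk_gb(frames: int, avg_image_mb: float) -> float:
    """Rough disk usage in GB (1 GB = 1000 MB); avg_image_mb is your own estimate."""
    return frames * avg_image_mb / 1000


if __name__ == "__main__":
    frames = estimate_frames(duration_s=60, fps=30)    # a one-minute clip at 30 fps
    print(frames)                                       # 1800
    print(estimate_disk_gb(frames, avg_image_mb=3.0))   # 5.4 (hypothetical 3 MB per frame)
```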

- 2. extract images from video data_dst FULL FPS (Now do the same for data_dst.mp4.)

- Again, press Enter a few times to use default settings.

- 3. data_src faceset extract (This extracts the faces from data_src.mp4.)

When prompted for Face Type, type “wf” (whole face), then continue with the defaults.

- 3.1. data_src view aligned result

Here you can review the extracted faces. Delete any that look distorted, misaligned, or incorrect before moving to training.
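If you'd rather not delete faces outright, a small script can move the bad ones aside so you can change your mind later. This sketch assumes the extracted faces are in workspace/data_src/aligned (check your own folder) and that you noted the file names of the bad faces while reviewing. The aligned_rejected folder name is made up for this example. It sits next to aligned, not inside it, so the rejected faces are out of the way of the faceset.

```python
import shutil
from pathlib import Path


def quarantine_faces(aligned_dir: Path, bad_names: list[str]) -> int:
    """Move bad face images into a sibling 'aligned_rejected' folder instead of deleting them.

    Returns how many files were moved; names that don't exist are skipped.
    """
    rejected_dir = aligned_dir.parent / "aligned_rejected"  # hypothetical folder name
    rejected_dir.mkdir(exist_ok=True)
    moved = 0
    for name in bad_names:
        src = aligned_dir / name
        if src.is_file():
            shutil.move(str(src), str(rejected_dir / name))
            moved += 1
    return moved
```

To undo, just move a file from aligned_rejected back into aligned.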

- 4. data_dst faceset extract (Same process as step 3, but for data_dst.mp4.)

Again, when asked for Face Type, type “wf” and proceed with defaults.

- 4.1. data_dst view aligned results (Review the destination faces and clean up any bad extractions.)

- 5.XSeg) data_dst mask - edit

This step is crucial, folks. Here we'll mask some faces by hand, so take your time: sloppy or incorrect masks show up clearly in the final video. I was a bit careless with mine and included the person's hair in a few places, which produced a rather annoying artifact. I'll post a photo of it at the end.

Masking more faces generally gives better results, but there's no need to overdo it.
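How many faces to mask is a judgment call. One simple approach (my assumption, not an official DeepFaceLab rule) is to spread your picks evenly across the clip so they cover different moments, angles, and expressions. This hypothetical helper returns evenly spaced positions in the sorted list of face images:

```python
def frames_to_mask(total_frames: int, count: int) -> list[int]:
    """Pick `count` evenly spaced 0-based positions across `total_frames` face images."""
    if count <= 0 or total_frames <= 0:
        return []
    count = min(count, total_frames)  # can't pick more faces than exist
    if count == 1:
        return [0]
    step = (total_frames - 1) / (count - 1)  # spacing that hits the first and last face
    return [round(i * step) for i in range(count)]
```

For instance, `frames_to_mask(1800, 5)` suggests positions 0, 450, 900, 1349, and 1799.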

- 5.XSeg) data_src mask - edit

Now repeat the same process for the source video.

- 5.XSeg) train

Now let's train our XSeg model. When asked for the face type, type "wf" again, then press Enter to start. The exciting part begins!

First, let's agree on this:

More Iterations = Better Results.

I recommend at least 10,000 iterations, though you can go further.

Okay, once you're satisfied, press Enter to save the model and end training. Let's continue.

- 5.XSeg) data_dst trained mask - apply (applies the trained model to the destination faces)

- 5.XSeg) data_src trained mask - apply (applies the trained model to the source faces)

- 5.XSeg) data_src mask - edit (check the results and re-mask any bad ones for the best output)

- 5.XSeg) data_dst mask - edit (check the results and re-mask any bad ones for the best output)

- 5.XSeg) train (after making corrections, train some more; if you didn't make any, you can skip retraining)

- 5.XSeg) data_dst trained mask - apply (the same step as before, this time applying the retrained model)

- 5.XSeg) data_src trained mask - apply (the same step as before, this time applying the retrained model)

Now the magic begins!

For the final training step, select:

- 6. Train SAEHD

and start training the model. Aim for at least 100,000 iterations; 500,000 or even 1,000,000 will give better results. When you're satisfied, press Enter to save the model and end training.
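To get a feel for how long those iteration counts take, multiply them by the time per iteration. The training window should show how fast it's going as it runs; the 500 ms per iteration used below is a purely hypothetical figure, so plug in your own.

```python
def training_hours(iterations: int, ms_per_iteration: float) -> float:
    """Wall-clock hours for `iterations` at a steady per-iteration time."""
    return iterations * ms_per_iteration / 3_600_000  # 3.6 million ms per hour


if __name__ == "__main__":
    # 500 ms per iteration is a hypothetical speed; read your own from the training window.
    for target in (100_000, 500_000, 1_000_000):
        print(f"{target:>9,} iterations: {training_hours(target, 500):.1f} h")
```

At that hypothetical speed, 100,000 iterations come to roughly 13.9 hours and 1,000,000 to about 139 hours, which is why your cooler will earn its keep.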

Your system will be under heavy load at this stage, and your computer will run hot. While mine was training, I went out and bought a laptop cooler. The screenshots below show how much strain the process puts on the hardware. You're getting every penny's worth out of your computer during this process! 😀

Graphics Card

Processor
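Want numbers instead of screenshots? On an NVIDIA card, the nvidia-smi tool that comes with the driver can report GPU temperature and load. This sketch covers the NVIDIA GPU only (not the processor); it calls nvidia-smi's CSV query mode and parses the result, and the function names are my own.

```python
import subprocess


def parse_gpu_stats(csv_text: str) -> list[tuple[int, int]]:
    """Parse 'temperature, utilization' lines into (temp_C, util_percent) tuples."""
    stats = []
    for line in csv_text.strip().splitlines():
        fields = [f.strip() for f in line.split(",")]
        if len(fields) != 2 or not all(f.isdigit() for f in fields):
            continue  # skip malformed lines or values such as "[N/A]"
        stats.append((int(fields[0]), int(fields[1])))
    return stats


def read_gpu_stats() -> list[tuple[int, int]]:
    """Query every NVIDIA GPU via nvidia-smi (assumes an NVIDIA card with drivers installed)."""
    out = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=temperature.gpu,utilization.gpu",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_stats(out)
```

Calling `read_gpu_stats()` every few seconds while SAEHD trains gives you a simple temperature log: one (temperature, load) pair per GPU, such as an illustrative `[(78, 99)]`.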

Step 4: Towards the Finale

Well, my dear friend, as the Turkish saying goes, we've swum the whole sea and reached its tail: we're almost there.

- 7. Merge SAEHD

Run it, and an interactive screen appears.

Adjust the result with key pairs such as Q-A / W-S / E-D / R-F / T-G / Y-H-N / U-J until it looks most natural, and press TAB to switch between screens. When you're done, press Shift + M and Shift + ? to apply these settings to the previous and next frames. Experiment: try the options one at a time, then mix and match them.

Once you're happy with the result, be sure to press "C" to adjust the "Color Transfer Mode," and tweak it however you like. This is where you create your own magic, and it has a significant impact on how realistic your results will be.

In this example, you can see the result of sloppy masking, folks. Had I masked more carefully, the result would have been far more realistic. But I've made the mistake for you, so you don't have to. 🙂

Step 5: The Grand Finale!

- 8. Click "Merged to mp4" to get your video. The result will be waiting for you in your "Workspace" folder under the name "result.mp4." You can share your results with your friends and loved ones, online, or with me!

I hope this was a helpful tutorial for you. You can send me your questions 24/7, and I'll answer them individually when I'm available.

I also share my social media accounts with you; you can reach me wherever you like. See you in my next post! 💚